Introduction

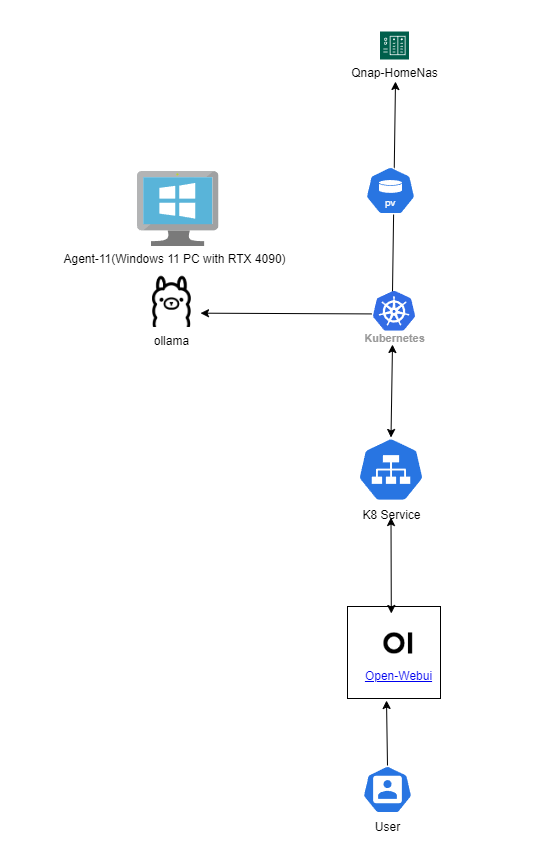

As technology continues to evolve, it's exciting to see how different components can come together to create something truly unique. In this blog post, I'll share my experience setting up a private LLM at home using an Ollama server running on Windows 11 with an RTX 4090, in combination with a Qnap NAS box and Kubernetes (K8s) running on an Intel NUC. We'll explore how these components work together to create a seamless and fun experience, all thanks to the power of Open-WebUI, Ollama, and the mighty llama3.

The Setup

To start, let's take a look at the setup I used for this project:

- Ollama Server: Running on Windows 11 with an RTX 4090

- Qnap NAS Box: An older model from Qnap, serving as reliable NFS storage for persistent K8s data.

- Intel NUC: A small but powerful device running Kubernetes (K8s) and acting as the central hub for my containerized applications.

- Open-WebUI: A simple web interface for chatting with the models served by Ollama, deployed in K8s.

Setup:

On the Windows 11 desktop, conveniently named Agent-11, I have Ollama installed and running as a server:

    ollama pull llama3
    ollama serve

Since this is for fun, with some productivity as a byproduct, I still use Agent-11 for other activities like writing this blog, so I spread the components across machines.
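Because the UI runs on a different machine than Ollama, one thing worth checking early is that the Ollama API answers over the LAN. Ollama binds to 127.0.0.1 by default, so it may need the `OLLAMA_HOST` environment variable (for example `0.0.0.0`) set on the Windows side before other hosts can reach it. The sketch below is only an illustration: it assumes the Ollama address used later in this post (`10.0.0.155:11434`) and queries the `/api/tags` endpoint, which lists the pulled models. The helper names are made up for this example.

```python
import json
import urllib.request

# Assumption: the Ollama host and port used in the manifests in this post.
OLLAMA_BASE_URL = "http://10.0.0.155:11434"


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names from the JSON returned by Ollama's /api/tags."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_models(base_url: str = OLLAMA_BASE_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama server which models it has pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Calling `list_models()` from another machine on the LAN should return the pulled models if the server is reachable; a connection error usually means Ollama is still listening on localhost only or a firewall is in the way.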

UI:

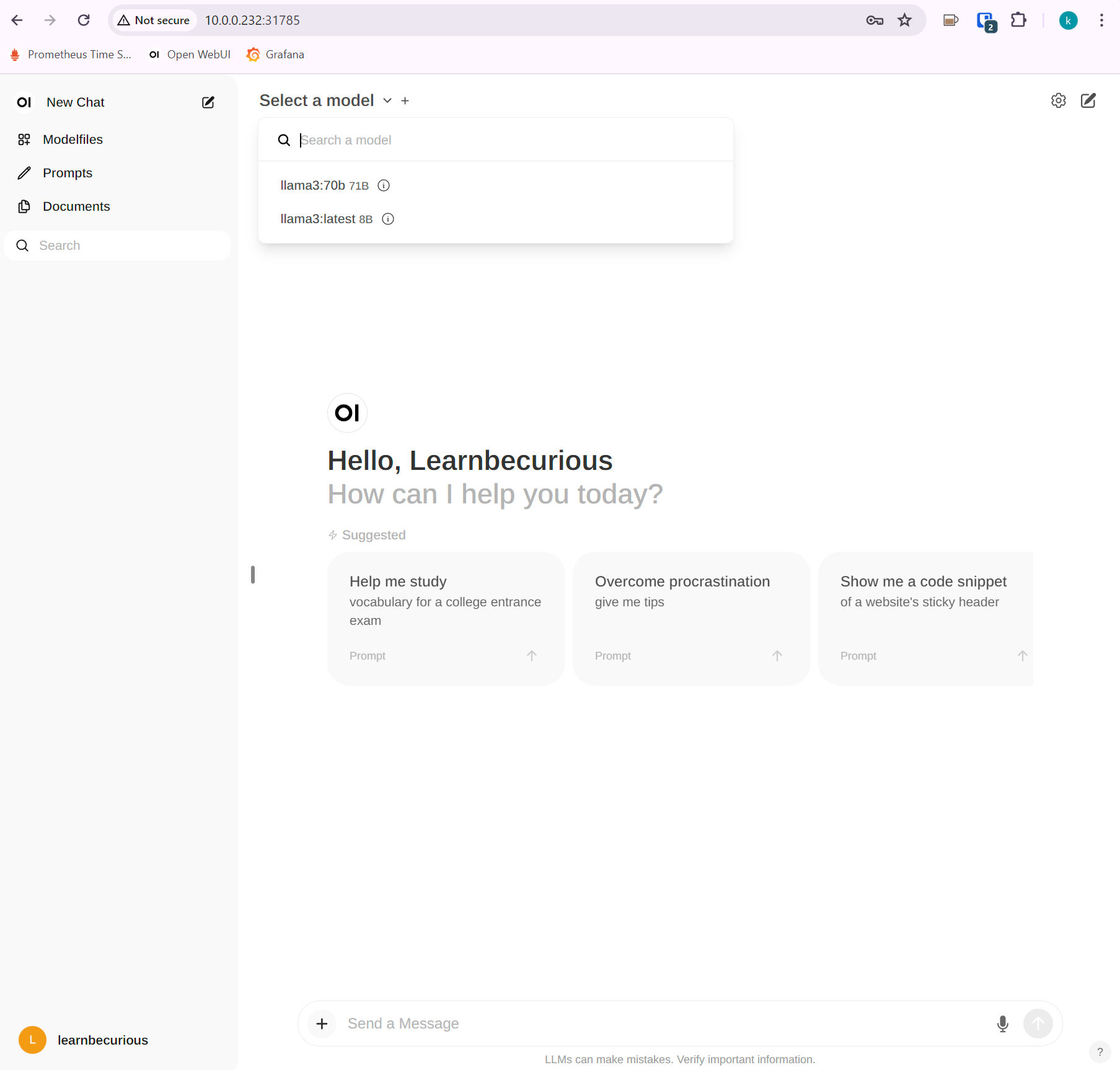

I was searching for the best way to interact with Llama. Although Ollama provides some native functionality, I found the popular Open-WebUI and am glad I spent some time exploring it. Deployment is fun, and the surprise was how quickly I could deploy it to K8s and have it up and running in seconds.

With this YAML file, I was able to deploy Open-WebUI using the K8s command-line tool. The Open-WebUI Service and Deployment were successfully created and started.

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: open-webui
      namespace: open-webui
    spec:
      type: NodePort
      ports:
        - port: 3000
          targetPort: 8080
          protocol: TCP
      selector:
        app: open-webui
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: open-webui
      namespace: open-webui
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: open-webui
      template:
        metadata:
          labels:
            app: open-webui
        spec:
          containers:
            - name: open-webui
              image: ghcr.io/open-webui/open-webui:main
              ports:
                - containerPort: 8080
              env:
                - name: OLLAMA_BASE_URL
                  value: "http://10.0.0.155:11434"
          restartPolicy: Always

Since I want the data to be persistent, I looked through some documentation and found the approach I am currently using (at least the login and previous prompts persist).

I have a 7+ year-old Qnap home NAS that had been inactive for a while and was only recently powered on when I started having some K8s fun. I created an NFS share, gave the home LAN (which the K8s nodes are part of) access to it, and created a folder in that storage called "open-webui".

1-open-webui-ns-pv-pvc.yaml

    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      name: open-webui
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: open-webui-pv-nfs
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      storageClassName: nfs
      nfs:
        path: /k8-storage/open-webui
        server: 10.0.0.129
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: open-webui-pvc-nfs-mount
      namespace: open-webui
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 10Gi
      storageClassName: nfs  # Must match the storageClassName in your PV

2-open-webui.yaml

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: open-webui
      namespace: open-webui
    spec:
      type: NodePort
      ports:
        - port: 3000
          targetPort: 8080
          protocol: TCP
      selector:
        app: open-webui
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: open-webui
      namespace: open-webui
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: open-webui
      template:
        metadata:
          labels:
            app: open-webui
        spec:
          containers:
            - name: open-webui
              image: ghcr.io/open-webui/open-webui:main
              ports:
                - containerPort: 8080
              env:
                - name: OLLAMA_BASE_URL
                  value: "http://10.0.0.155:11434"
              volumeMounts:
                - name: open-webui-storage-volume
                  mountPath: /app/backend/data
          restartPolicy: Always
          volumes:
            - name: open-webui-storage-volume
              persistentVolumeClaim:
                claimName: open-webui-pvc-nfs-mount
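The comment in the PVC about matching the PV's `storageClassName` is the part that is easiest to get wrong, so here is a rough sketch of the compatibility check for a statically provisioned volume like this one. It is a simplification for illustration, not how Kubernetes implements binding: it only compares storage class, access modes, and size, and it takes hand-written dictionaries rather than reading the manifests. The function names and dictionary keys are made up for this example.

```python
# Binary-suffix multipliers for Kubernetes quantities (only the subset needed here).
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(quantity: str) -> int:
    """Convert a quantity such as '10Gi' to bytes; bare integers are bytes."""
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def pvc_mismatches(pv: dict, pvc: dict) -> list[str]:
    """Return the reasons the claim would not fit the volume (empty if none)."""
    problems = []
    if pv["storageClassName"] != pvc["storageClassName"]:
        problems.append("storageClassName differs")
    if not set(pvc["accessModes"]) <= set(pv["accessModes"]):
        problems.append("PV does not offer the requested accessModes")
    if parse_quantity(pv["capacity"]) < parse_quantity(pvc["request"]):
        problems.append("PV capacity is smaller than the request")
    return problems


# Values copied from the PV and PVC in 1-open-webui-ns-pv-pvc.yaml.
pv = {"storageClassName": "nfs", "accessModes": ["ReadWriteMany"], "capacity": "10Gi"}
pvc = {"storageClassName": "nfs", "accessModes": ["ReadWriteMany"], "request": "10Gi"}
print(pvc_mismatches(pv, pvc))  # prints []
```

If the claim stays in `Pending` after applying the manifests, these three fields are a reasonable first place to look.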

After deploying the above, I access the Service directly through its NodePort:

    k8admin@k8-ct1:~$ k apply -f 1-open-webui-ns-pv-pvc.yaml
    namespace/open-webui created
    persistentvolume/open-webui-pv-nfs created
    persistentvolumeclaim/open-webui-pvc-nfs-mount created
    k8admin@k8-ct1:~$ k apply -f 2-open-webui.yaml
    service/open-webui created
    deployment.apps/open-webui created
    k8admin@k8-ct1:~$ k get svc -n open-webui -o wide
    NAME         TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE   SELECTOR
    open-webui   NodePort   10.99.87.103   <none>        3000:31785/TCP   51s   app=open-webui

Ref-Diagram:

Once deployed, I could access Open-WebUI, create a user for login, validate that it can load models from Ollama, and select a default model (in my case I have 2 models in Ollama and I am using "llama3-8B").
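A small detail in the `get svc` output that can trip people up: in `3000:31785/TCP`, 3000 is the Service port inside the cluster, while 31785 is the NodePort to use in the browser together with any node's IP. The snippet below just spells that out. The node IP in it is a placeholder from the documentation address range, not an address from this setup, and the function names are made up for this example.

```python
def node_port(ports_column: str) -> int:
    """Pull the NodePort out of a PORT(S) value like '3000:31785/TCP'."""
    mapping = ports_column.split("/")[0]  # '3000:31785'
    return int(mapping.split(":")[1])     # 31785


def webui_url(node_ip: str, ports_column: str) -> str:
    """Build the browser URL for a NodePort Service."""
    return f"http://{node_ip}:{node_port(ports_column)}"


# 192.0.2.10 is a placeholder node IP, not one from this home LAN.
print(webui_url("192.0.2.10", "3000:31785/TCP"))  # prints http://192.0.2.10:31785
```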

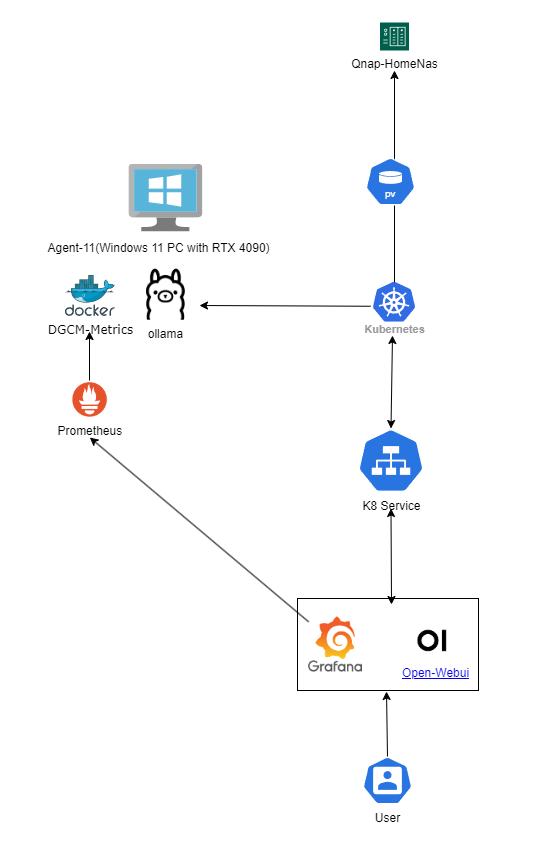

Once everything was working and I started having some fun with llama3, I wondered how to make this more exciting, which is when I started looking into metrics for my llama usage. Luckily, I have Grafana running in my K8s cluster (CrossRef: K8-Setup Link in future). I explored some ways to export metrics; it's not straightforward, but once it's up and running it's almost invisible, and it's fun to see how closely GPU usage tracks Open-WebUI/Ollama activity.
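To give a feel for what exporting GPU metrics can involve, here is a minimal sketch. It is not the exporter used for the screenshot in this post: it assumes `nvidia-smi` is on the PATH of the GPU machine and that a Prometheus-style scraper feeds Grafana, and the metric names are invented for illustration. It runs one `nvidia-smi` query and turns the CSV rows into Prometheus text-format lines.

```python
import subprocess

# nvidia-smi query flags; the metric names further down are made up for this sketch.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]


def to_prometheus(csv_text: str) -> str:
    """Turn nvidia-smi CSV rows (one per GPU) into Prometheus text lines."""
    lines = []
    for index, row in enumerate(csv_text.strip().splitlines()):
        util, mem_used = (field.strip() for field in row.split(","))
        lines.append(f'gpu_utilization_percent{{gpu="{index}"}} {util}')
        lines.append(f'gpu_memory_used_mib{{gpu="{index}"}} {mem_used}')
    return "\n".join(lines) + "\n"


def scrape() -> str:
    """Run nvidia-smi once and return the formatted metrics."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return to_prometheus(result.stdout)
```

Serving the output of `scrape()` over HTTP so a scraper can collect it is left out here; a ready-made GPU exporter is probably the more practical choice for anything long-running.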

Ref-Diagram

Grafana Screenshot: The peaks are where I am asking llama3 for help.

References/Tools:-